What is MLOps? – Benefits, Start with MLOps and DevOps vs. MLOps

McKinsey reports AI adopters with a proactive strategy achieve significantly higher profit margins — between 3% and 15% above the industry average.

Today, two-thirds of executives cite AI as vital to the future of their business, with plans to increase investments this year. As a result, IDC reports the global AI market is forecast to accelerate further in 2022 with 18.8% growth and remain on track to break the $500 billion mark by 2024.

MLOps—machine learning operations, or DevOps for machine learning—enables data science and IT teams to collaborate and increase the pace of model development and deployment via monitoring, validation, and governance of machine learning models.

What is MLOps?

MLOps, or machine learning operations, refers to the process and tooling of consistently developing, deploying and maintaining reliable, responsible AI. By applying the broad concepts and principles of DevOps to machine learning, MLOps helps organisations understand, manage and scale the holistic data lifecycle through repeatable processes. Like DevOps, MLOps emphasises automation, collaboration and continuous feedback.

MLOps takes the well-known approaches of DevOps and applies them to a line of work that is experimental and complex, so that enterprises can leverage data science in a highly compliant and regulated environment. Take the finance sector as an example: through machine learning, lenders determine credit scores for customers by mining third-party datasets and deliver these insights back to stakeholders in a matter of seconds.

While this would not surprise many, what happens if someone asks how the lender landed on a particular credit score? For this approach to be compliant, banks must be able to explain and trace a model’s behaviour in production. At the very least, this means being able to reproduce experiments and artefacts (such as models), version the models, handle bias, create lineage (tracing models right back to the data used for training) and monitor how models are performing in production. All this, while managing potentially thousands of models. MLOps helps organisations achieve this level of scale within a highly sensitive and governed environment.
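To make versioning and lineage more concrete, here is a minimal sketch of a lineage record that ties a model version to a fingerprint of its training data, its hyperparameters and the code revision. The `LineageRecord` class, the `build_record` helper and all field names are hypothetical and chosen for illustration only; a real platform would store far more detail.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


def fingerprint(payload: bytes) -> str:
    """Return a SHA-256 hex digest identifying the exact bytes that were used."""
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical record linking one model version to its inputs."""
    model_name: str
    model_version: str
    training_data_sha256: str
    hyperparameters: dict
    code_revision: str  # for example, a Git commit hash

    def to_json(self) -> str:
        # sort_keys keeps the serialised record stable across runs
        return json.dumps(asdict(self), sort_keys=True)


def build_record(name, version, training_data: bytes, hyperparameters, code_revision):
    """Create a lineage record from the raw training data and run settings."""
    return LineageRecord(
        model_name=name,
        model_version=version,
        training_data_sha256=fingerprint(training_data),
        hyperparameters=dict(hyperparameters),
        code_revision=code_revision,
    )
```

Because the record is derived only from its inputs, two runs with the same data, settings and code produce the same record, while any change to the training data changes the fingerprint. That is the property an auditor needs when asking which data produced a given credit-scoring model.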

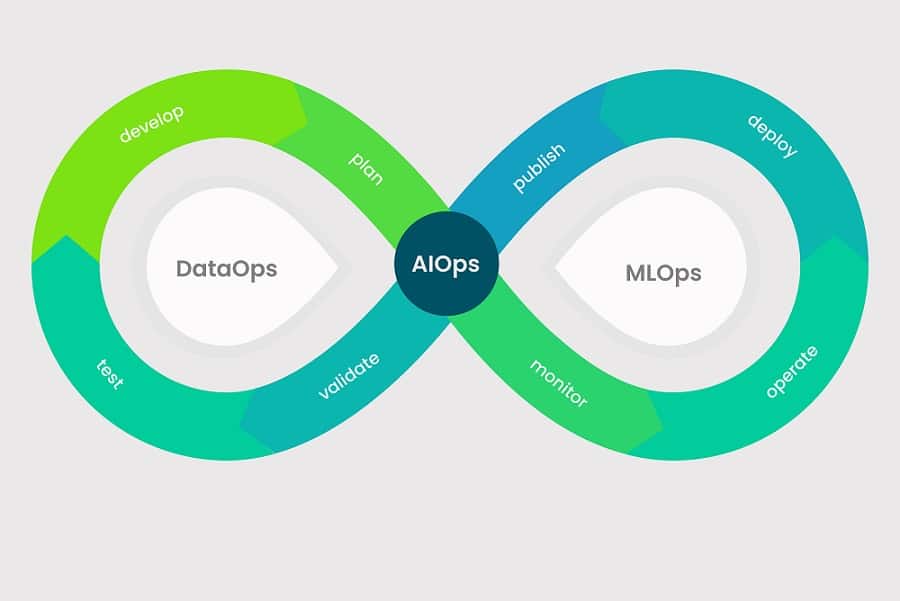

What’s the difference between MLOps and AIOps?

While MLOps is the application of DevOps to improve the development of AI, AIOps is the application of artificial intelligence to improve IT operations.

DataOps and MLOps are different from AIOps. AIOps is a term used to describe the use of AI to improve IT operations along with the business architecture. AI allows machines to solve problems in an IT environment without human assistance or interaction, and AIOps uses machine learning and advanced analytics to automate and resolve challenges in real time for optimized operations across hybrid environments. AIOps supports continuous integration and deployment of machine learning and big data solutions, such as MLOps and DataOps, so that teams can respond quickly to changing market demands. The ultimate objective of AIOps is to continuously develop and deploy agile applications, improve digital services and enhance IT operations over time.

What is DataOps?

DataOps, or data-powered operations, is for IT operational teams, such as data engineers, data researchers and software developers, who are working to develop data-powered applications or the software infrastructure that supports data operations. DataOps involves two main practices:

- Data transformation and enrichment: to ensure that every kind of data (structured, semi-structured, and unstructured) is optimally harnessed to drive actionable insights.

- Operationalizing the data: from the edge to the cloud, maintaining and monitoring data operations involves the consolidation to the single source of truth, data orchestration, and data governance.

DataOps makes operations related to data analysis and augmentation work better, faster and at lower cost. As a term, DataOps has been gradually gaining popularity over the past several years, and its approach is sometimes described as a best practice for businesses that work with continuous analytics and data application development.

Example MLOps platforms:

Uber’s Michelangelo: https://eng.uber.com/michelangelo-machine-learning-platform/

Facebook’s FBLearner: https://engineering.fb.com/core-data/introducing-fblearner-flow-facebook-s-ai-backbone/

Benefits of MLOps

Organizations with MLOps initiatives reap several benefits, including:

- Improved confidence in their models

- Improved compliance with regulatory guidance

- Faster response times to changing environmental conditions

- Lower break-fix cost

- Increased trust and ability to drive valuable insights

These benefits put organizations with MLOps initiatives ahead of the competition as their counterparts continue to struggle with packaging, deploying, and maintaining stable model versions. MLOps can help mitigate these challenges while adding more value to the organization with improved quality and better performance.

ML tiered on DevOps – overcoming challenges

To solve issues with manual implementation and deployment of machine learning systems, teams need to adopt modern practices that make it easier to create and deploy enterprise applications efficiently.

MLOps leverages the same principles as DevOps, but with an extra layer: the ML model or system.

The modeling code, dependencies, and any other runtime requirements can be packaged to implement reproducible ML. Reproducible ML will help reduce the costs of packaging and maintaining model versions (giving you the power to answer the question about the state of any model in its history). Additionally, since it has been packaged, it will be much easier to deploy at scale. This step of reproducibility is one of several key steps in the MLOps journey. – Neal Analytics
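As a rough illustration of capturing runtime requirements alongside a model, the sketch below records the Python version, the platform and the installed versions of a list of dependencies. The `build_manifest` function and the manifest layout are assumptions made for this example, not part of any particular MLOps tool; only standard-library calls are used.

```python
import json
import platform
import sys
from importlib import metadata


def build_manifest(model_version: str, packages: list[str]) -> dict:
    """Collect the runtime facts needed to rebuild the model's environment."""
    pinned = {}
    for name in packages:
        try:
            pinned[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Record the gap explicitly rather than silently skipping it
            pinned[name] = None
    return {
        "model_version": model_version,
        "python": platform.python_version(),
        "platform": sys.platform,
        "dependencies": pinned,
    }


if __name__ == "__main__":
    # The package names here are placeholders; list your model's real dependencies
    manifest = build_manifest("1.0.0", ["pip", "a-package-that-is-not-installed"])
    print(json.dumps(manifest, indent=2, sort_keys=True))
```

Storing a manifest like this next to each packaged model is one simple way to answer what environment a given model version ran in. Container images or lock files serve the same purpose more thoroughly.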

The traditional way of delivering ML systems is common in many businesses, especially when they are just starting out with ML. Manual implementation and deployment is enough when models are rarely changed. However, a model might fail when applied to real-world data if it cannot adapt to changes in the environment or in the data.

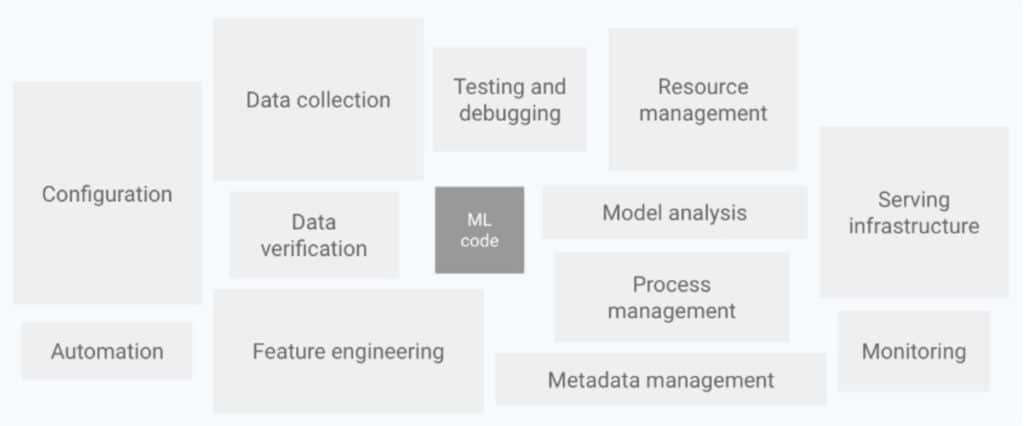

Why is it hard?

Google’s visual description of ‘The Machine Learning System’ is a great way to appreciate the challenges in building an MLOps architecture.

Figure 1 – Google – The Machine Learning System

Organisations that are starting their AI journey may be solely focussed on the machine learning itself. In the real world, this may look like a Data Scientist working on their own laptop, with their own tools, while using Notepad or MS Word to document how their experimentation is going. In a Production scenario, not only will this not suffice, there are now the added challenges of serving infrastructure, monitoring of the models, re-training of the models and process management, to name a few. How do you scale your AI initiatives while taking all these extra concerns into account?

Getting started with MLOps

By 2024, Gartner projects 75% of enterprises will shift from piloting to operationalising AI, driving a 5x increase in streaming data and analytics infrastructures. As the number of AI projects in production continues to rise, so will the need for a mature MLOps approach. The sooner your business identifies and adopts the right tools and processes to fit your needs, the better you’ll be positioned to compete in the next few years.

So how do organisations get started?

For those that haven’t begun deploying AI, discussions around MLOps should be included in the earliest stages of planning. Developing a preemptive strategy for operationalisation will enable your teams to start delivering business value faster. For those actively working to put AI into production, investing the time to optimise with MLOps will help to alleviate the obstacles to continuous integration and delivery.

In either case, the first step is to bring all impacted teams together (including data scientists and engineers, infrastructure and DevOps teams, software developers, business analysts, architects and IT leaders) to begin researching, developing and documenting a comprehensive MLOps strategy.

The goal of this documentation is to guide every process and decision throughout the AI lifecycle. While the specifics will vary based on the organisation, scope and skill set, the strategy should clearly define how an ML model will move from stage to stage, designating responsibility for each task along the way. If there are existing process flows in place, these can be used as a starting point to identify challenges and develop solutions.
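One way to make such a strategy explicit is to write the stages, the allowed moves between them and the accountable team down as data. The sketch below is only an illustration: the stage names, the transitions and the team names are hypothetical and would differ for every organisation.

```python
from enum import Enum


class Stage(Enum):
    EXPERIMENT = "experiment"
    STAGING = "staging"
    PRODUCTION = "production"
    ARCHIVED = "archived"


# Hypothetical policy: allowed moves and the team accountable for approving each
TRANSITIONS = {
    (Stage.EXPERIMENT, Stage.STAGING): "data science",
    (Stage.STAGING, Stage.EXPERIMENT): "data science",
    (Stage.STAGING, Stage.PRODUCTION): "ML engineering",
    (Stage.PRODUCTION, Stage.ARCHIVED): "IT operations",
}


def approver_for(current: Stage, target: Stage) -> str:
    """Return the team that signs off a transition, or raise if it is not allowed."""
    try:
        return TRANSITIONS[(current, target)]
    except KeyError:
        raise ValueError(
            f"{current.value} -> {target.value} is not an allowed transition"
        ) from None
```

With this policy, `approver_for(Stage.STAGING, Stage.PRODUCTION)` names the responsible team, while an attempt to move a model straight from experiment to production raises an error instead of slipping through.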

Building your MLOps strategy is also an opportunity to evaluate the need for MLOps tools. Although tooling options are not quite as extensive as those available for DevOps, there are still platforms that can help you manage the machine learning lifecycle. Prioritise your desired features, capabilities and compatibility with your existing data ecosystem to narrow down the best option for your organisation.

MLOps toolset

MLOps frameworks provide a single place to deploy, manage and monitor all your models. Overall, these tools simplify a complex process and save a great deal of time. When choosing an MLOps tool, the features, capabilities and compatibility discussed above are worth considering. Enterprises might also be interested in providers that offer free trials. Let’s have a look at a few MLOps tools:

- Amazon SageMaker

- Neptune

- DataRobot

- MLflow

- Kubeflow

- Azure ML

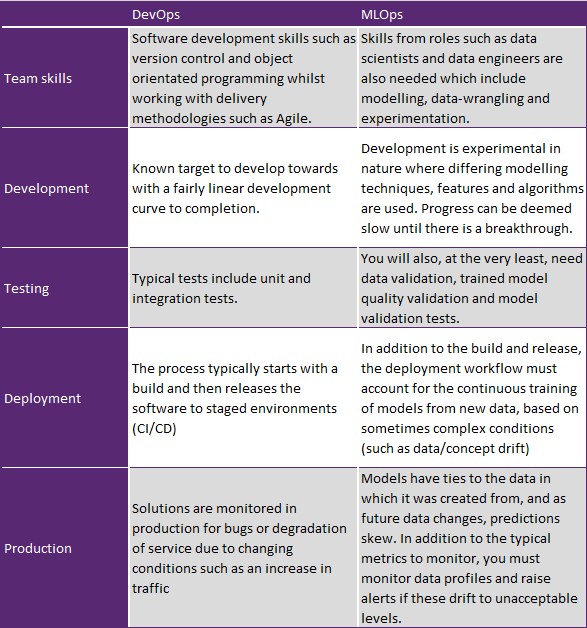

DevOps vs. MLOps

Though there are many similarities between DevOps projects and ML projects, it’s important not to take DevOps practices and techniques and apply them blindly to machine learning projects. The IT team does not have deep expertise in modeling algorithms, and data scientists do not want to manage infrastructure, so it’s important to bridge the gap with ML engineers when implementing MLOps.

The ML Engineer role brings a specialized skillset with the mandate of collaborating with IT and the business to ensure the models are well supported throughout the lifecycle. Aside from skillset, there are key differences in the activities taken when implementing DevOps vs. MLOps.

Skill-sets also play a part in the difficulty of scaling. Whilst Data Scientists come from an experimental background, they typically lack the practices of a software engineer, which include version control, collaboration and DevOps. Conversely, Software Engineers lack the modelling and analytical skills of a Data Scientist.

As a result of this skill-set mismatch, getting a data science model from Proof-of-Concept into Production turns into a multi-business-unit affair. This involves multiple hand-offs and meetings, creating delays and loss of context between the teams – how would a software engineer know how to handle a deployment issue that is related to the model itself?

Figure 2 – DevOps vs MLOps (based on Google’s 5 dimensions)

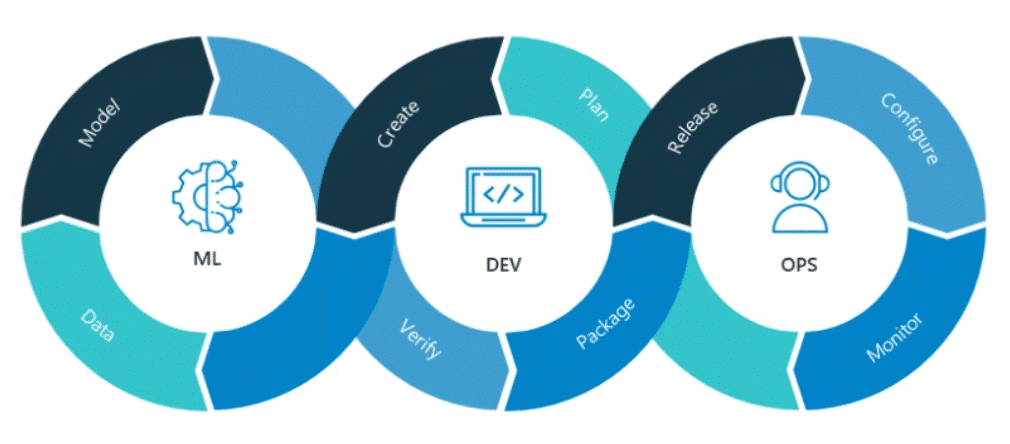

What does MLOps look like?

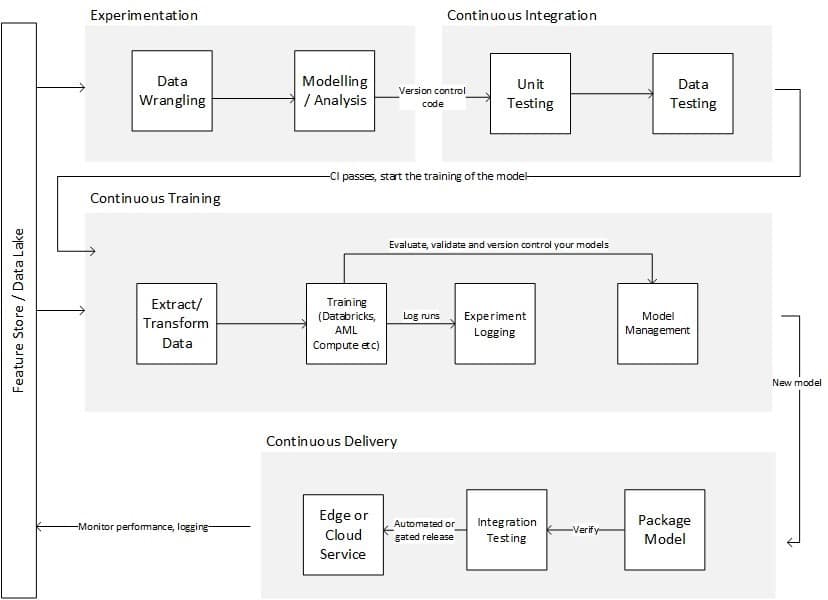

As we take a step lower and dive into the detail, an MLOps workflow may look like the one below. Both Microsoft and Google have their own representations of this life-cycle.

Figure 3 – Sample MLOps workflow

The highlight features of an MLOps platform are:

Experimentation. As Machine Learning Engineers experiment over data, their progress is automatically logged (think code artifacts, training accuracy metrics) and can be compared to other runs.

Continuous Integration. As code is checked-in to a code repository, it is run against various unit tests, data tests and integration tests.

Continuous Training. As new data or code artefacts are generated, the training workflow is automatically triggered and run through various tests to understand its performance against current models.

Continuous Deployment. As models pass various validity tests, they can be automatically or semi-automatically promoted through various test-prod environments. Models can also be provisioned through various deployment workflows such as Blue-Green Deployment and Canary Deployment.

Monitoring. Models in production must be continuously monitored for auditing requirements and machine learning specific requirements such as data drift and concept drift (a shift in the input data, or in the relationship between the inputs and the target, that causes predictions to skew). Feedback loops must remain tight, keeping true to the DevOps principles.
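The monitoring, continuous training and continuous deployment steps above form a feedback loop, which can be sketched roughly as follows. This example uses the Population Stability Index (PSI) as one common way to quantify drift in a single input feature; the function names, the 0.2 threshold and the promotion rule are assumptions for illustration, not a prescribed standard.

```python
import math


def psi(reference, live, bins=10):
    """Population Stability Index between two numeric samples of one feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp so values outside the reference range land in the edge bins
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins
        return [max(c / len(sample), 1e-6) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


def needs_retraining(reference, live, threshold=0.2):
    """Monitoring: flag the feature when drift exceeds an assumed threshold."""
    return psi(reference, live) > threshold


def should_promote(candidate_score, current_score, min_gain=0.0):
    """Deployment gate: promote only if the retrained model is at least as good."""
    return candidate_score >= current_score + min_gain
```

In this sketch, `needs_retraining` would be evaluated on a schedule against the training-time sample of each feature, and a positive result would trigger the training workflow. The retrained model is then promoted only if `should_promote` passes on a held-out evaluation score, which keeps the loop automatic without letting a weaker model reach production.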

Where to from here?

Aligning to a Machine Learning platform will give you the greatest opportunity for success. Support for MLOps platforms has been growing rapidly, with services such as Azure Machine Learning Services (Microsoft) and MLflow (Databricks) paving the way. Many of our customers have been mixing these services based on their alignment to open-source or cloud vendors. The key here is to look for services that are interoperable with one another and offer deployment approaches such as containers that can be deployed both in the cloud and on the (intelligent) edge.

At Insight, we’ve had great success in leveraging the technologies of Azure Machine Learning Services, MLflow, Azure DevOps and Microsoft Teams to create fully automated MLOps workflows that enable organisations to accelerate AI. If you’d like to know more, please reach out!