10 Hadoop Hardware Leaders

Hadoop software is designed to orchestrate massively parallel processing on relatively low-cost servers that pack plenty of storage close to the processing power. All the power, reliability, redundancy, and fault tolerance are built into the software, which distributes the data ...

How Accurate is Mahout for Summing Numbers?

A question was recently posted on the Mahout mailing list suggesting that the Mahout math library was "unwashed" because it didn't use Kahan summation. My feeling is that this complaint is unfounded and Mahout is considerably more washed than ...
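For readers who have not met Kahan summation, here is a minimal sketch of the technique in Python. It is not Mahout code and says nothing about how Mahout sums numbers; the function names and the test input (one million copies of 0.1) are assumptions chosen for illustration, and `math.fsum` serves as the correctly rounded reference.

```python
import math


def naive_sum(values):
    """Plain left-to-right accumulation in double precision."""
    total = 0.0
    for v in values:
        total += v
    return total


def kahan_sum(values):
    """Kahan (compensated) summation: carry the rounding error forward."""
    total = 0.0
    compensation = 0.0  # running estimate of the low-order bits lost so far
    for v in values:
        y = v - compensation            # apply the correction to the next term
        t = total + y                   # low-order bits of y may be lost here
        compensation = (t - total) - y  # recover what was just lost
        total = t
    return total


# Illustrative input (an assumption, not from the original post).
values = [0.1] * 1_000_000
exact = math.fsum(values)

print("naive error:", abs(naive_sum(values) - exact))
print("kahan error:", abs(kahan_sum(values) - exact))
```

The compensation term is algebraically zero, so it only has an effect because floating-point addition rounds; that is the whole trick.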

10 Hot Hadoop Startups to Watch in 2026

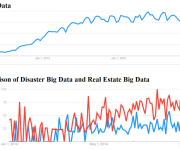

It's no secret that data volumes are growing exponentially. What's a bit more mysterious is figuring out how to unlock the value of all of that data. A big part of the problem is that traditional databases weren't designed for ...

Top 7 Tips to Succeed with Big Data

Today, businesses are focusing on and investing in big data analytics to offer reliable services and increase profits. Big data plays a vital role in making better business decisions by enabling data scientists and other users to ...

Using Scala To Work With Hadoop

Cloudera has a great toolkit for working with Hadoop. Specifically, it focuses on building distributed systems and services on top of the Hadoop ecosystem.

http://cloudera.github.io/cdk/docs/0.2.0/cdk-data/guide.html

And the examples are in Scala!!!!

Here is how you work with generic stuff on the ...

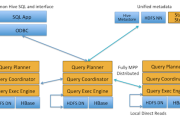

Impala and SQL on Hadoop

The origins of Impala can be found in F1 – The Fault-Tolerant Distributed RDBMS Supporting Google’s Ad Business.

One of the many differences between MapReduce and Impala is that in Impala the intermediate data moves directly from process to process instead of storing it ...
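The difference between handing intermediate data straight to the next stage and storing it first can be sketched with a toy, single-process Python example. This is not Impala or MapReduce code; the stage names, the row layout, and the temporary JSON-lines file are all assumptions made for illustration.

```python
import json
import os
import tempfile


def scan(rows):
    """First stage: emit rows one at a time."""
    for row in rows:
        yield row


def keep_large(rows, threshold):
    """Second stage: pass through rows whose size exceeds the threshold."""
    for row in rows:
        if row["bytes"] > threshold:
            yield row


def total_bytes(rows):
    """Final stage: aggregate."""
    return sum(row["bytes"] for row in rows)


def staged_total(rows, threshold):
    """Write the intermediate result to a file, then read it back."""
    with tempfile.NamedTemporaryFile("w", suffix=".jsonl", delete=False) as f:
        for row in keep_large(scan(rows), threshold):
            f.write(json.dumps(row) + "\n")
        path = f.name
    try:
        with open(path) as f:
            return total_bytes(json.loads(line) for line in f)
    finally:
        os.remove(path)


def streamed_total(rows, threshold):
    """Hand each row directly to the next stage; nothing is stored."""
    return total_bytes(keep_large(scan(rows), threshold))


# Hypothetical sample data.
rows = [{"id": i, "bytes": i * 10} for i in range(1, 6)]
print(staged_total(rows, 20), streamed_total(rows, 20))
```

Both functions return the same answer; the staged version simply pays for an extra write and read of the intermediate rows.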

Selecting the right SQL-on-Hadoop engine to access big data

With SQL-on-Hadoop technologies, it's possible to access big data stored in Hadoop by using the familiar SQL language. Users can plug in almost any reporting or analytical tool to analyze and study the data. Before SQL-on-Hadoop, accessing big data was ...

Configure Eclipse for MapReduce

1. Download Eclipse Europa or Indigo

2. Download the Hadoop Eclipse plugin, e.g. hadoop-eclipse-plugin-1.0.3.jar

3. Copy the jar into the Eclipse plugins folder

4. Open Eclipse

5. Add Map/Reduce server

6. Add New DFS Location

Location name: localhost

Map/Reduce Master:

port: 9001

DFS Master:

port: 9000

Finish

7. New -> Other -> Map/Reduce Project

-> ...
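If Eclipse cannot connect to the DFS location, it can help to check that something is actually listening on the two ports from step 6. The Python sketch below does only that. The helper name `port_open` is hypothetical, and using `localhost` as the host is an assumption, since the steps above give a location name but no host for either master.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Ports come from step 6; the host "localhost" is an assumption.
for name, port in [("DFS Master", 9000), ("Map/Reduce Master", 9001)]:
    status = "reachable" if port_open("localhost", port) else "not reachable"
    print(f"{name} on localhost:{port} is {status}")
```

A "not reachable" result usually means the Hadoop daemons are not running or are configured on different ports; the script cannot tell which.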

Apache Mahout is moving on from MapReduce

Apache Mahout, a machine learning library for Hadoop since 2009, is joining the exodus away from MapReduce. The project’s community has decided to rework Mahout to support the increasingly popular Apache Spark in-memory data-processing framework, as well as the H2O engine for ...

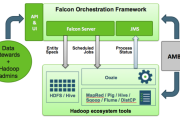

Apache Falcon - Data Governance for Hadoop

Apache Falcon is a data governance engine that defines, schedules, and monitors data management policies. Falcon allows Hadoop administrators to centrally define their data pipelines, and then Falcon uses those definitions to auto-generate workflows in Apache Oozie.

InMobi is one of ...