A Beginner’s Guide to Learning Apache Kafka From Scratch in 2024

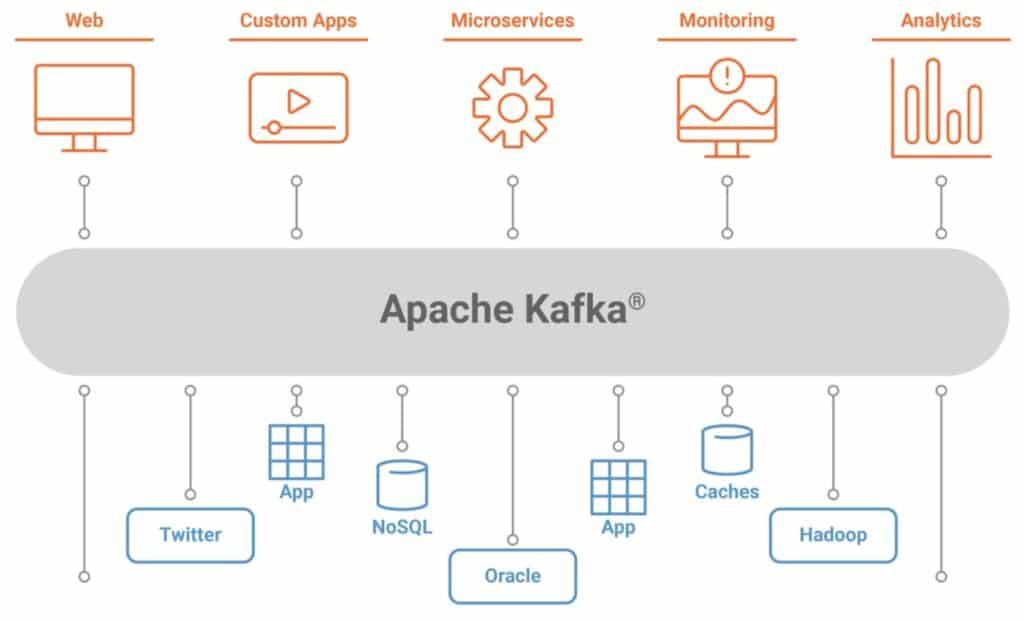

Kafka is a name you hear more and more often these days. Thousands of companies, including Netflix, Airbnb, Microsoft, Target, and Twitter, use Apache Kafka. It fits into web applications, custom apps, microservices, monitoring, and analytics.

However, do you actually know what Kafka is? Kafka is a platform that was originally developed at LinkedIn. Since then, it has evolved to let users store huge amounts of data, use it as a message bus, and perform real-time processing on the data that passes through it. In simple terms, Kafka is a commit log that is distributed, horizontally scalable, and fault-tolerant. Now let us look at each of these keywords in detail and learn more about Apache Kafka.

What does “distributed” mean?

A distributed system is one that is split across several running machines, which work together so that they appear as a single node to the end user. When we say that Kafka is a distributed system, we mean that it stores, receives, and sends messages on a set of different nodes, which are commonly referred to as brokers.

What does “horizontally scalable” mean?

To understand this term, it helps to first understand what “vertically scalable” means. Let us simplify things with an example. If a traditional database server starts to get overloaded, one way out is vertical scaling, also called scaling up. This means we simply add more resources (CPU, RAM, SSD, etc.) to the machine. Scaling up has two main disadvantages. First, you cannot scale up indefinitely, because the hardware imposes certain limits. Second, it usually requires downtime, which is something big corporations cannot afford.

Now let us look at “horizontally scalable.” Horizontal scaling solves the same problem by throwing more machines at it. Adding a machine does not require downtime, and there is no fixed limit on the number of machines you can put in your cluster. The catch is that not all systems support horizontal scalability, because they are not designed to work in a cluster.

What is fault-tolerant?

Non-distributed systems have a single point of failure, often abbreviated as “SPoF.” If your single database server fails, you are in trouble. Distributed systems, by contrast, are designed to handle such crashes and failures in a configurable way. For example, a 5-node Kafka cluster can continue working even if 2 of its nodes are down, provided the data is replicated across enough nodes. Keep in mind, however, that there is generally a trade-off: the more fault-tolerant your system is, the less performant it will be.
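To make the 5-node example concrete, here is a minimal sketch in Python. It assumes each partition of data is copied to 3 of the 5 brokers (the replication factor of 3 and the simple round-robin placement are illustrative assumptions, not taken from Kafka’s actual placement logic) and checks that every partition still has a live copy no matter which 2 brokers fail.

```python
from itertools import combinations


def assign_replicas(num_partitions, brokers, replication_factor):
    """Place each partition's replicas on consecutive brokers (round-robin)."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    placement = {}
    for p in range(num_partitions):
        placement[p] = [
            brokers[(p + i) % len(brokers)] for i in range(replication_factor)
        ]
    return placement


def available_partitions(placement, failed):
    """Return the partitions that still have at least one replica on a live broker."""
    return [
        p for p, replicas in placement.items()
        if any(broker not in failed for broker in replicas)
    ]


brokers = ["b0", "b1", "b2", "b3", "b4"]  # hypothetical broker names
placement = assign_replicas(num_partitions=10, brokers=brokers, replication_factor=3)

# Try every possible pair of failed brokers.
survives_any_two_failures = all(
    len(available_partitions(placement, set(failed))) == len(placement)
    for failed in combinations(brokers, 2)
)
print(survives_any_two_failures)
```

With three copies of each partition, losing any two brokers always leaves at least one copy alive. The price is that every record has to be written three times, which is one place the fault-tolerance versus performance trade-off shows up.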

Let’s define “commit log”

A commit log is also commonly known as a “write-ahead log” or “transaction log.” It is a persistent, ordered data structure that only supports appends. This means that you cannot change or delete records in it. It guarantees the ordering of its items and is read from left to right, that is, from the oldest record to the newest.
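The idea can be sketched in a few lines of Python. This is a toy, in-memory illustration only: a real commit log persists its records to disk, and the class and method names below are made up for this example.

```python
class CommitLog:
    """A toy in-memory append-only log (a real commit log persists to disk)."""

    def __init__(self):
        self._records = []

    def append(self, record):
        """Add a record at the end and return its position in the log."""
        self._records.append(record)
        return len(self._records) - 1

    def read(self, start=0):
        """Return the records from position `start` onward, oldest first."""
        if start < 0:
            raise ValueError("start must be non-negative")
        # A tuple is returned so callers cannot modify the log through it.
        return tuple(self._records[start:])


log = CommitLog()
log.append("user signed up")
log.append("user logged in")
log.append("user logged out")

for record in log.read():
    print(record)
```

Note that the class deliberately offers no way to update or delete a record: appending and reading are the only operations.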

How does Kafka work?

At its core, the way Kafka works is simple. Applications called producers send messages, also called records, to a broker. These messages are then processed by other applications, which are commonly referred to as consumers. The records are stored in what is called a “topic,” and consumers subscribe to a topic to receive new messages.

Because topics can get huge, they are split into smaller partitions to improve performance and scalability. Kafka guarantees that all the records inside a partition are ordered in the sequence in which they arrived. This ordering holds within each partition, not across the topic as a whole.
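A rough sketch of partitioning in Python is shown below. It assumes that each message carries a key and that the key decides the partition, which is a common setup but is not described above. The CRC32 hash is only a stand-in chosen for this example, and the `Topic` class is hypothetical.

```python
import zlib


def choose_partition(key, num_partitions):
    """Map a key to a partition with a stable hash (CRC32 as a stand-in)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


class Topic:
    """A toy topic: a fixed list of partitions, each an append-only list."""

    def __init__(self, name, num_partitions):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def send(self, key, value):
        """Append a message to the key's partition; return (partition, position)."""
        p = choose_partition(key, len(self.partitions))
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1


topic = Topic("page-views", num_partitions=3)  # hypothetical topic name
for value in ["home", "search", "checkout"]:
    topic.send("user-42", value)

# All messages for the same key land in one partition, in arrival order.
p = choose_partition("user-42", 3)
print([value for _, value in topic.partitions[p]])
```

Because the same key always hashes to the same partition, the events for one user stay in order, while different users can be spread over different partitions.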

A message is identified by its offset within a partition. You can think of the offset as a normal array index, that is, a sequence number that is incremented for each new message in a partition. Kafka follows the well-known principle of the dumb broker and the smart consumer. This means that Kafka does not keep track of which records a consumer has read in order to delete them afterwards. Instead, it stores records for a set amount of time, such as a day, or until some size limit is reached. Consumers poll Kafka for new messages and tell it which offset they want to read from. This lets them move their offset forwards or backwards, and thus replay and reprocess events.

It is worth noting that consumers are organized into consumer groups, each of which contains one or more consumer processes. Within a group, each partition is assigned to exactly one consumer process, so that no two processes in the same group read the same message.

Kafka has traditionally stored its cluster metadata in a separate service called ZooKeeper. Newer Kafka versions can also run without it, in what is known as KRaft mode.
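The dumb-broker, smart-consumer split can be sketched as follows. This is a simplified, in-memory illustration with made-up class names and an explicit `now` argument instead of a real clock. The point is that retention depends only on the age of a record, while the read position lives on the consumer side.

```python
class Partition:
    """Toy partition: keeps records for a fixed time, regardless of readers."""

    def __init__(self, retention_seconds):
        self.retention_seconds = retention_seconds
        self._records = []  # list of (offset, timestamp, value)
        self._next_offset = 0

    def append(self, value, now):
        """Store a value and return the offset assigned to it."""
        offset = self._next_offset
        self._records.append((offset, now, value))
        self._next_offset += 1
        return offset

    def expire(self, now):
        """Drop records older than the retention period; never checks who read them."""
        cutoff = now - self.retention_seconds
        self._records = [r for r in self._records if r[1] >= cutoff]

    def poll(self, offset, max_records=10):
        """Return up to max_records (offset, value) pairs at or after `offset`."""
        batch = [(o, v) for o, _, v in self._records if o >= offset]
        return batch[:max_records]


class Consumer:
    """Toy consumer: it, not the partition, remembers where it is."""

    def __init__(self, partition):
        self.partition = partition
        self.offset = 0

    def poll(self):
        """Fetch new values and advance past the last one returned."""
        batch = self.partition.poll(self.offset)
        if batch:
            self.offset = batch[-1][0] + 1
        return [value for _, value in batch]

    def seek(self, offset):
        """Move the read position, for example backwards to replay events."""
        self.offset = offset


partition = Partition(retention_seconds=86400)  # keep records for one day
for second, value in enumerate(["a", "b", "c"]):
    partition.append(value, now=second)

consumer = Consumer(partition)
first_read = consumer.poll()   # reads everything stored so far
consumer.seek(1)               # rewind to offset 1
replayed = consumer.poll()     # reads from offset 1 again

partition.expire(now=86401)    # the record written at second 0 is now too old
consumer.seek(0)
after_expiry = consumer.poll()
```

Rewinding works because reading never removes anything. Once the retention period has passed, though, the oldest record is gone for every consumer, whether or not anyone read it.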
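The one-partition-to-one-consumer rule inside a group can be sketched as a simple assignment function. The round-robin scheme and the consumer names below are assumptions made for illustration; Kafka ships several assignment strategies of its own, and this is not a reproduction of any of them.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions round-robin over the consumers of a single group."""
    if not consumers:
        raise ValueError("a consumer group needs at least one consumer")
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(sorted(partitions)):
        owner = consumers[i % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Six partitions shared by a group of four consumer processes.
group = assign_partitions(range(6), ["c1", "c2", "c3", "c4"])
for consumer, owned in group.items():
    print(consumer, owned)

# With more consumers than partitions, the extra consumers get nothing to read.
crowded = assign_partitions(range(2), ["c1", "c2", "c3"])
```

Every partition ends up with exactly one owner in the group, so no message is handled twice by the same group. A second group would get its own, independent assignment and would read all messages again.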

What is ZooKeeper?

It can be defined as a distributed key-value store. It is widely used for storing metadata and handling the mechanics of clustering (like heartbeats, distribution of updates and configurations, etc.).
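To give a feel for these two jobs, storing metadata and tracking heartbeats, here is a toy sketch in Python. It is not ZooKeeper’s API: the class, its methods, the path, and the timeout value are all invented for this example, and time is passed in explicitly to keep it simple.

```python
class MetadataStore:
    """Toy stand-in for a coordination service; not ZooKeeper's actual API."""

    def __init__(self, session_timeout):
        self.session_timeout = session_timeout
        self._data = {}       # path -> value
        self._last_seen = {}  # broker id -> time of its last heartbeat

    def put(self, path, value):
        """Store a piece of metadata under a path-like key."""
        self._data[path] = value

    def get(self, path):
        """Return the stored value, or None if the path is unknown."""
        return self._data.get(path)

    def heartbeat(self, broker_id, now):
        """Record that a broker was alive at time `now`."""
        self._last_seen[broker_id] = now

    def live_brokers(self, now):
        """Brokers whose last heartbeat is within the session timeout."""
        return sorted(
            broker for broker, seen in self._last_seen.items()
            if now - seen <= self.session_timeout
        )


store = MetadataStore(session_timeout=10)
store.put("/topics/orders/partitions", 3)  # hypothetical path and value

store.heartbeat("b0", now=0)
store.heartbeat("b1", now=0)
store.heartbeat("b0", now=8)  # b0 keeps checking in, b1 goes silent

alive = store.live_brokers(now=15)
print(alive)
```

A broker that stops sending heartbeats simply ages out of the live list, which is roughly how the rest of a cluster can find out that a node has gone away.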