Choosing the Right Version Control System for Your Development Projects

In the dynamic world of software development, collaboration and efficient codebase management are pivotal to success. This is where version control systems (VCS) come in, serving as a cornerstone of modern development practices. With a plethora of options ...

MapReduce for C: Run Native Code in Hadoop

Google announced the release of MapReduce for C (MR4C), an open source framework that allows you to run native code in Hadoop.

MR4C was originally developed at Skybox Imaging to facilitate large-scale satellite image processing and geospatial data science. We ...

How to Run a Simple Apache Spark App in CDH 5

Getting started with Spark (now shipping inside CDH 5) is easy using this simple example.

Apache Spark is a general-purpose cluster computing framework that, like MapReduce in Apache Hadoop, offers powerful abstractions for processing large datasets. For various reasons pertaining to ...

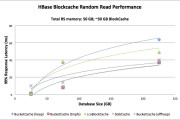

HBase BlockCache Showdown

The HBase BlockCache is an important structure for enabling low-latency reads. As of HBase 0.96.0, there are no fewer than three different BlockCache implementations to choose from. But how do you know when to use one over another? There’s ...

How to Implement Role-based Security in Impala Using Apache Sentry

Apache Sentry (incubating) is the Apache Hadoop ecosystem tool for role-based access control (RBAC). In this how-to, I will demonstrate how to implement Sentry for RBAC in Impala. I feel this introduction is best motivated by a use case.

Data warehouse ...

7 Tips for Improving MapReduce Performance

One service that Cloudera provides for our customers is help with tuning and optimizing MapReduce jobs. Since MapReduce and HDFS are complex distributed systems that run arbitrary user code, there’s no hard-and-fast set of rules to achieve optimal ...