MapReduce for C: Run Native Code in Hadoop

MR4C was originally developed at Skybox Imaging to facilitate large scale satellite image processing and geospatial data science. We found the job tracking and cluster management capabilities of Hadoop well-suited for scalable data handling, but also wanted to leverage the powerful ecosystem of proven image processing libraries developed in C and C++. While many software companies that deal with large datasets have built proprietary systems to execute native code in MapReduce frameworks, MR4C represents a flexible solution in this space for use and development by the open source community.

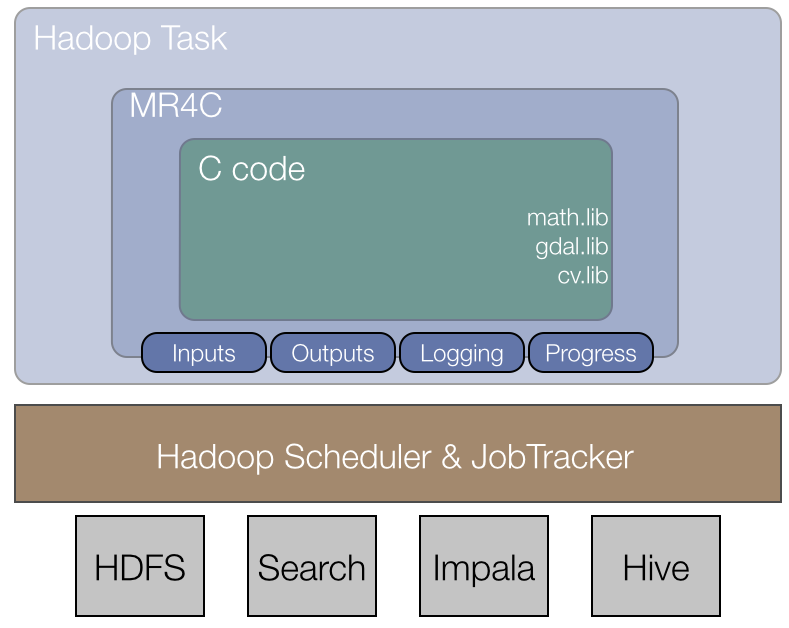

MR4C is developed around a few simple concepts that facilitate moving your native code to Hadoop. Algorithms are stored in native shared objects that access data from the local filesystem or any uniform resource identifier (URI), while input/output datasets, runtime parameters, and any external libraries are configured using JavaScript Object Notation (JSON) files. Splitting mappers and allocating resources can be configured with Hadoop YARN based tools or at the cluster level for MRv1. Workflows of multiple algorithms can be strung together using an automatically generated configuration. There are callbacks in place for logging and progress reporting which you can view using theHadoop JobTracker interface. Your workflow can be built and tested on a local machine using exactly the same interface employed on the target cluster.

If this sounds interesting to you, get started with our documentation and source code at the MR4C GitHub page. The goal of this project is to abstract the important details of the MapReduce framework and allow users to focus on developing valuable algorithms. Let us know how we’re doing in our Google Group. Source