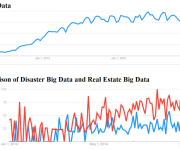

10 Big Data Predictions for 2014

Big data was one of the biggest buzzwords of 2013, and companies are spending heavily on big data analytics. The storage and analysis of large and/or complex data sets using a series of techniques including, but not ...

Using Scala To Work With Hadoop

Cloudera has a great toolkit for working with Hadoop. Specifically, it focuses on building distributed systems and services on top of the Hadoop ecosystem.

http://cloudera.github.io/cdk/docs/0.2.0/cdk-data/guide.html

And the examples are in Scala!

Here is how you work with generic stuff on the ...

MongoDB 2.6 Released

In the five years since the initial release of MongoDB, and after hundreds of thousands of deployments, we have learned a lot. The time has come to take everything we have learned and create a basis for continued innovation over ...

How To Choose The Best Tool For Your Big Data Project

Trying to choose the right tool for a big data project? This chart (and three simple rules) can help guide you through the options. This chart is based on one shown by Microsoft Research senior research program manager Wenming Ye ...

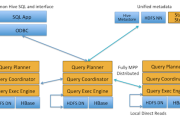

Impala and SQL on Hadoop

The origins of Impala can be found in F1 – The Fault-Tolerant Distributed RDBMS Supporting Google’s Ad Business.

One of many differences between MapReduce and Impala is that, in Impala, the intermediate data moves directly from process to process instead of storing it ...

A guide to NoSQL offerings

Amazon Web Services: DynamoDB is a NoSQL database service that makes it simple and cost-effective to store and retrieve any amount of data and serve any level of request traffic. Users simply tell the service how many requests need to ...

Apache Mahout is moving on from MapReduce

Apache Mahout, a machine learning library for Hadoop since 2009, is joining the exodus away from MapReduce. The project’s community has decided to rework Mahout to support the increasingly popular Apache Spark in-memory data-processing framework, as well as the H2O engine for ...

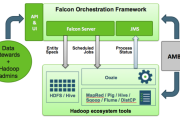

Apache Falcon: Data Governance for Hadoop

Apache Falcon is a data governance engine that defines, schedules, and monitors data management policies. Falcon allows Hadoop administrators to centrally define their data pipelines, and then Falcon uses those definitions to auto-generate workflows in Apache Oozie.

InMobi is one of ...

How to Contribute to HBase and Hadoop2

By Nick Dimiduk

In case you haven’t heard, Hadoop2 is on the way! There are loads more new features than I can begin to enumerate, including lots of interesting enhancements to HDFS for online applications like HBase. One of the most ...

How-to: Implement Role-based Security in Impala Using Apache Sentry

Apache Sentry (incubating) is the Apache Hadoop ecosystem tool for role-based access control (RBAC). In this how-to, I will demonstrate how to implement Sentry for RBAC in Impala. I feel this introduction is best motivated by a use case.

Data warehouse ...