Google BigQuery and Datastore Connectors for Hadoop

Users of Google’s cloud platform should find it easier to run Hadoop jobs directly against data in Google BigQuery and Google Cloud Datastore from now on.

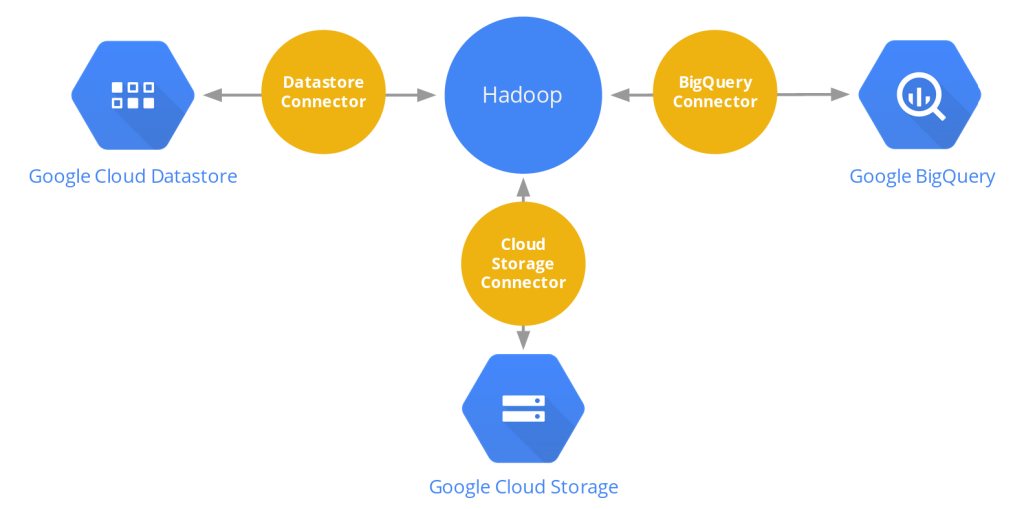

We are making it easier for you to run Hadoop jobs directly against your data in Google BigQuery and Google Cloud Datastore with the Preview release of the Google BigQuery connector and the Google Cloud Datastore connector for Hadoop. Both connectors implement Hadoop’s InputFormat and OutputFormat interfaces for accessing data. They complement the existing Google Cloud Storage connector for Hadoop, which implements the Hadoop Distributed File System interface for accessing data in Google Cloud Storage.

The connectors can be installed and configured automatically when you deploy your Hadoop cluster with bdutil. Simply include the extra “env” file for each connector you want:

- `./bdutil deploy bigquery_env.sh`

- `./bdutil deploy datastore_env.sh`

- `./bdutil deploy bigquery_env.sh datastore_env.sh`
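If you script your deployments, the three variants above can be generated from a list of connector names. The following is a minimal sketch, not part of bdutil: `build_deploy_cmd` is a hypothetical helper, and it only prints the command in the form shown above rather than running it, so you can check the result before deploying.

```shell
# Hypothetical helper (not part of bdutil): prints the deploy command
# for the requested connectors without running it.
build_deploy_cmd() {
  cmd="./bdutil deploy"
  for connector in "$@"; do
    case "$connector" in
      bigquery)  cmd="$cmd bigquery_env.sh" ;;
      datastore) cmd="$cmd datastore_env.sh" ;;
      *) echo "unknown connector: $connector" >&2; return 1 ;;
    esac
  done
  echo "$cmd"
}

# Dry run: print the command for a cluster with both connectors.
build_deploy_cmd bigquery datastore
```

Printing instead of executing keeps the sketch safe to try; once the output looks right, you can run the printed command from your bdutil directory.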

Here are some word-count MapReduce code samples to get you started:

- Using the BigQuery connector

- Using the Datastore connector

- Using the Datastore connector for reading data and using the BigQuery connector for publishing results

As always, we would love to hear your feedback and ideas on improving these connectors and making Hadoop run better on Google Cloud Platform.