Hadoop Cluster Interview Questions

Which are the three modes in which Hadoop can be run?

The three modes in which Hadoop can be run are:

1. Standalone (local) mode

2. Pseudo-distributed mode

3. Fully distributed mode

What are the features of standalone (local) mode?

In standalone mode there are ...

16 Top Big Data Analytics Platforms

Revolutionary. That pretty much describes the data analysis time in which we live. Businesses grapple with huge quantities and varieties of data on one hand, and ever-faster expectations for analysis on the other. The vendor community is responding by providing ...

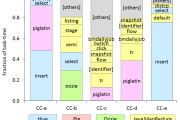

Introduction to Apache Hive and Pig

Apache Hive is a framework that sits on top of Hadoop for doing ad-hoc queries on data in Hadoop. Hive supports HiveQL which is similar to SQL, but doesn't support the complete constructs of SQL.

Hive converts the HiveQL query into ...

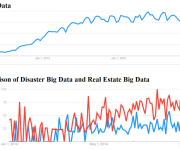

Top 10 Big Data Trends in 2014

In January 2014, IDG published its latest big data enterprise survey and predictions for 2014, finding that, on average, enterprises will spend $8M on big data-related initiatives in 2014. The study also found that 70% of enterprise organizations have ...

HBase Architecture

HBase – The Basics:

HBase is an open-source, distributed, non-relational (NoSQL), versioned, multi-dimensional, column-oriented store that is modeled after Google Bigtable and runs on top of HDFS. “NoSQL” is a broad term meaning that the database isn’t an RDBMS which ...

Use Cases Of MongoDB

MongoDB is a relatively new contender in the data storage circle compared to giants like Oracle and IBM DB2, but it has gained huge popularity with its distributed key-value store, MapReduce calculation capability, and document-oriented NoSQL features.

MongoDB has ...

Introduction to Impala

Impala is significant in the Hadoop ecosystem because of its:

Scalability

Flexibility

Efficiency

What’s Impala?

Impala is…

Interactive SQL: Impala is typically 5 to 65 times faster than Hive, as it reduces response time to seconds rather than minutes.

Nearly ANSI-92 standard and compatible with ...

MongoDB Interview Questions

What were you trying to solve when you created MongoDB?

We were and are trying to build the database that we always wanted as developers. For pure reporting, SQL and relational is nice, but when building data we always wanted something different: ...

Hadoop Interview Questions – MapReduce

Looking for Hadoop interview questions that employers frequently ask?

What is MapReduce?

It is a framework or a programming model that is used for processing large data sets over clusters of computers using distributed programming.
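To make the programming model concrete, here is a minimal single-process sketch of a word count in plain Python. It does not use Hadoop or any Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. In a real cluster, the framework would run the map and reduce steps on many machines and handle the grouping between them.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group emitted values by key (done by the framework in a real cluster)."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over an in-memory list of lines."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big clusters", "data sets"]))
```

Because each call to `map_phase` depends only on its own line, and each call to `reduce_phase` depends only on its own key, both steps could be spread across many workers, which is the property a distributed framework relies on.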

What are 'maps' and 'reduces'?

'Maps' ...

3 Tools Companies Can Use to Harness the Power of Big Data

To the individual user, Big Data might simply mean a new 3-terabyte hard drive, which can be acquired for a hundred bucks or so. But real Big Data projects require clusters of servers, vast amounts of storage, and specialized software ...