Beyond Compliance: How Predictive Gaze Modeling Enhances Digital Accessibility

Understanding Predictive Gaze Modeling in Accessibility

Creating truly accessible digital content is essential for inclusivity. While developers often focus on technical compliance, understanding how users with diverse needs visually interact with a page is a crucial, often overlooked, layer of accessibility. This is where predictive gaze modeling comes in.

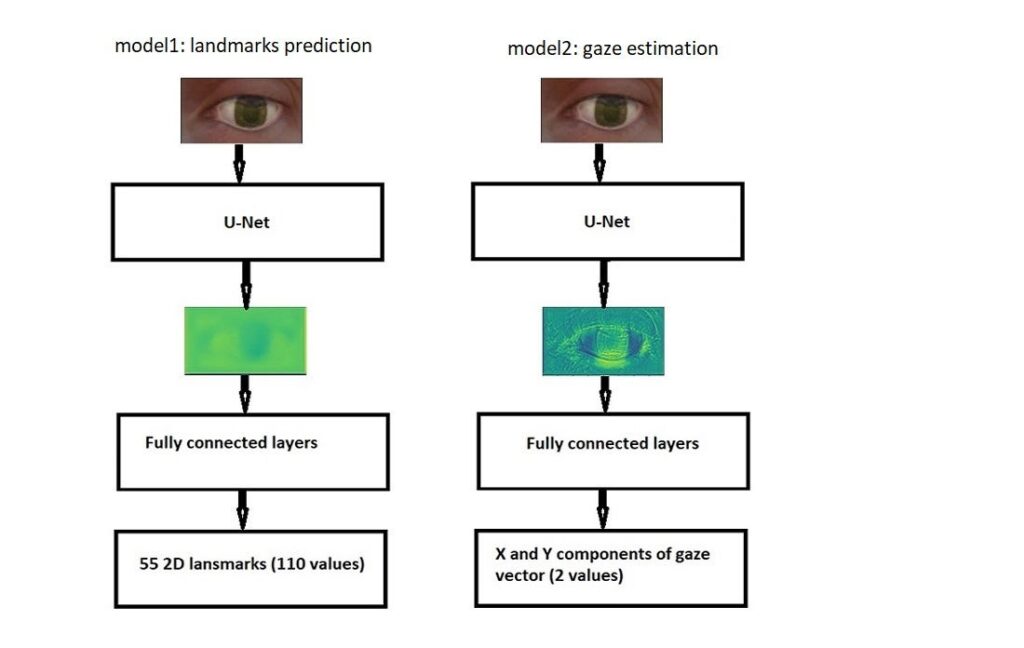

Predictive gaze modeling uses AI to predict visual attention: where users are most likely to look on a screen within the first few seconds, typically shown as a heatmap over the design. By understanding these patterns, designers can optimize layouts, navigation, and content presentation to make interfaces more intuitive and inclusive for everyone.

A Crucial Partner for Accessibility Audits

Tools that audit for accessibility, such as the widely used WAVE extension or axe DevTools, check for technical requirements like color contrast, font sizes, and proper HTML structure. These checks are vital, but they don’t answer a critical question: “Will a user notice the most important information?” Predictive gaze modeling serves as a validation tool to answer exactly that.
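
To make the "technical requirements" side concrete, here is a minimal sketch of the kind of color contrast check an audit tool performs, following the contrast ratio formula defined in WCAG 2.x. The function names are illustrative and are not taken from WAVE or axe DevTools.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    value = hex_color.lstrip("#")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) up to 21.0 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    print(round(contrast_ratio("#767676", "#ffffff"), 2))
    print(passes_aa("#777777", "#ffffff"))
```

A check like this says whether the text is legible once found. It says nothing about whether the eye lands on it in the first place, which is the gap attention prediction is meant to fill.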

Predictive gaze modeling provides rapid, data-driven insights into the visual hierarchy of a design. For designers and UX professionals tasked with ensuring compliance with the Web Content Accessibility Guidelines (WCAG), this complements the checklist: a widely held principle in usability practice is that reducing visual clutter and cognitive load is a cornerstone of effective design, because complex interfaces can overwhelm users and hinder task completion. Before running user tests, a predictive heatmap can quickly reveal whether a critical call-to-action is visually lost or whether a complex navigation menu risks cognitive overload. This allows teams to fix fundamental layout issues early, saving time and resources.
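
As a rough illustration of how a heatmap can flag a "visually lost" call-to-action, the sketch below measures what share of total predicted attention falls inside a region. It assumes the heatmap is available as a plain grid of non-negative scores. The grid format, the region coordinates, and the 10% threshold are all assumptions made for this example, not the output format or defaults of any particular tool.

```python
from typing import List, Tuple

Heatmap = List[List[float]]          # assumed format: rows of attention scores
Region = Tuple[int, int, int, int]   # (top, left, bottom, right), bottom/right exclusive


def attention_share(heatmap: Heatmap, region: Region) -> float:
    """Fraction of total predicted attention that falls inside the region."""
    top, left, bottom, right = region
    total = sum(sum(row) for row in heatmap)
    if total == 0:
        return 0.0
    inside = sum(sum(row[left:right]) for row in heatmap[top:bottom])
    return inside / total


def is_visually_lost(heatmap: Heatmap, region: Region, min_share: float = 0.10) -> bool:
    """Flag a region whose attention share is below an (arbitrary) threshold."""
    return attention_share(heatmap, region) < min_share


# Hypothetical 4x4 heatmap: attention concentrates on the headline area.
heatmap = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 6.0, 2.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.5],
]
headline = (1, 1, 2, 3)
cta_button = (3, 3, 4, 4)

if __name__ == "__main__":
    print(attention_share(heatmap, headline))
    print(is_visually_lost(heatmap, cta_button))
```

The threshold is a team decision rather than a standard; the point is that the question "is the button noticed?" becomes something that can be checked on every design iteration.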

Practical Applications for Inclusive Design

Predictive gaze modeling is not a replacement for comprehensive accessibility testing but a fast, scalable first step. It helps address visual barriers that affect all users, particularly those with cognitive or visual impairments.

For example, a cluttered design with multiple competing visual elements can be disorienting. A predictive gaze model helps designers identify these competing “visual hotspots” and simplify the layout around them, creating a clearer, more logical path through the content. It validates whether the intended reading order (for instance, from a headline to a key benefit to a button) is what users will intuitively follow. By ensuring the design guides the eye naturally, we create a more seamless experience for users who may struggle with processing complex information.
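
The reading-order check can be sketched in a few lines. The example below assumes each page region already has a single predicted attention score and uses "higher score is seen earlier" as a simplifying proxy for viewing order. Real tools may predict scan paths differently, and the region names and scores here are invented for illustration.

```python
from typing import Dict, List, Tuple


def predicted_order(scores: Dict[str, float]) -> List[str]:
    """Regions sorted from most to least predicted attention (proxy for viewing order)."""
    return sorted(scores, key=scores.get, reverse=True)


def order_mismatches(intended: List[str], scores: Dict[str, float]) -> List[Tuple[int, str, str]]:
    """Positions (1-based) where the predicted order departs from the intended one."""
    predicted = predicted_order(scores)
    return [
        (position, want, got)
        for position, (want, got) in enumerate(zip(intended, predicted), start=1)
        if want != got
    ]


# Hypothetical scores: a promo banner competes with the intended path.
intended = ["headline", "key_benefit", "cta_button"]
scores = {
    "headline": 0.42,
    "key_benefit": 0.11,
    "cta_button": 0.07,
    "promo_banner": 0.30,
}

if __name__ == "__main__":
    for position, want, got in order_mismatches(intended, scores):
        print(f"position {position}: expected {want}, predicted {got}")
```

In this made-up layout the promo banner is predicted to draw the eye before the key benefit, which is exactly the kind of competing hotspot a designer would tone down or move.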

Integrating Predictive Insights into UX Workflows

For UX and conversion rate optimization (CRO) teams, predictive attention tools can be integrated directly into accessibility workflows. They can run as a standalone check or be called through an API alongside other automated testing suites. By combining technical compliance checks with predictive visual data, organizations can move beyond simply meeting standards and proactively design digital experiences that are not just usable, but genuinely empathetic, fair, and effective for a diverse user base.