5 technologies that will help big data cross the chasm

We’re on the cusp of a real turning point for big data. Its applications are becoming clearer, its tools are getting easier and its architectures are maturing in a hurry. It’s no longer just about log files, clickstreams and tweets. ...

A New Python Client for Impala

The new Python client for Impala will bring smiles to Pythonistas!

As a data scientist, I love using the Python data stack. I also love using Impala to work with very large data sets. But things that take me out of ...

Cloudera, MongoDB partner to mash up NoSQL, Hadoop

Hadoop specialist Cloudera announced a strategic partnership with MongoDB this week that will allow Cloudera customers to store Hadoop data in their NoSQL MongoDB databases. The move is a huge win for MongoDB, which is quickly emerging as one of ...

Bringing the Best of Apache Hive 0.13 to CDH Users

More than 300 bug fixes and stable features in Apache Hive 0.13 have already been backported into CDH 5.0.0.

Last week, the Hive community voted to release Hive 0.13. We’re excited about the continued efforts and progress in the project and ...

Intro to Machine Learning

Machine learning is a subset of artificial intelligence: the study of systems that can learn from data. A machine learning system can be trained, and the core of the field deals with representation and generalization.

Machine learning is a "Field of ...

10 Hadoop Hardware Leaders

Hadoop software is designed to orchestrate massively parallel processing on relatively low-cost servers that pack plenty of storage close to the processing power. All the power, reliability, redundancy, and fault tolerance are built into the software, which distributes the data ...

Using Apache Hadoop and Impala together with MySQL for data analysis

Apache Hadoop is commonly used for data analysis. It is fast for data loads and scalable. In a previous post I showed how to integrate MySQL with Hadoop. In this post I will show how to export a table from ...

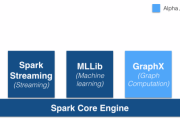

How to Run a Simple Apache Spark App in CDH 5

Getting started with Spark (now shipping inside CDH 5) is easy using this simple example.

Apache Spark is a general-purpose, cluster computing framework that, like MapReduce in Apache Hadoop, offers powerful abstractions for processing large datasets. For various reasons pertaining to ...

Apache Spark is now part of MapR’s Hadoop distribution

Hadoop vendor MapR is getting in early on the Apache Spark action, too, announcing on Thursday that it’s adding the Spark stack to its Hadoop distribution as part of a partnership with Spark startup Databricks (Ion Stoica, the co-founder and CEO of ...

Using Scala To Work With Hadoop

Cloudera has a great toolkit for working with Hadoop. Specifically, it is focused on building distributed systems and services on top of the Hadoop ecosystem.

http://cloudera.github.io/cdk/docs/0.2.0/cdk-data/guide.html

And the examples are in Scala!!!!

Here is how you work with generic stuff on the ...