7 Keys To Building A Successful Big Data Infrastructure

The infrastructure you build for big data, whether you’re looking at software or hardware, will have a huge impact on the analysis and action your big data systems will support. Here are 7 factors that can make a big difference when building your big data architecture.

1.Big Data Is More Than Hadoop

In casual conversation, big data and Hadoop are often used almost interchangeably. That’s unfortunate because big data is much more than Hadoop. Hadoop is built around a distributed file system, HDFS (not a database), that’s designed to spread data across hundreds or thousands of processing nodes. It is used in a lot of big data applications because, as a file system, it’s great at dealing with data that isn’t structured, data that doesn’t even look like the data around it. Of course, some big data is structured, and for that you’ll want a database. But that’s a different item on the list.
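To make "spreading data across nodes" a little more concrete, here is a toy Python sketch. It cuts a blob of bytes into fixed-size blocks and assigns each block to several nodes. The function names, node names, block size, and round-robin placement are all assumptions made for illustration; this is not how Hadoop actually decides where blocks go.

```python
def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut a byte string into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(num_blocks: int, nodes: list[str], replication: int) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct nodes, round-robin.

    Hypothetical placement rule for illustration only.
    """
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


# Made-up data and node names.
data = b"unstructured log line\n" * 10
nodes = ["node-a", "node-b", "node-c", "node-d"]

blocks = split_into_blocks(data, block_size=64)
placement = place_blocks(len(blocks), nodes, replication=3)

for block_id, holders in placement.items():
    print(block_id, len(blocks[block_id]), holders)
```

Because every block lives on more than one node, losing a single node does not lose the data, and the blocks can be read back and rejoined in order.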

2.Hive And Impala Bring Database To Hadoop

Ahh, the database for the structured part of your big data world. This can get a little confusing, so hang on. If you want to bring some order to your Hadoop data platform, then Hive can be the ticket. It’s an infrastructure tool that lets you run SQL-like queries against data stored in the very un-SQL Hadoop.

If you have some part of your data that easily fits inside a structured database, then Impala is a SQL query engine designed to live within Hadoop, and it can also make use of the Hive table definitions and queries you might have developed on the journey from Hadoop to SQL. All three of these (Hadoop, Hive, and Impala) are Apache projects, so they’re open source. Have fun.

3.Spark Is For Processing Big Data

So far, we’ve been talking about storing and organizing data. But what about when you want to actually do something with the data? That’s when you need an analytical and processing engine like Spark. Spark is yet another Apache project, and here it stands in for a bunch of open source and commercial products that will take the data you shoved into your lakes, warehouses, and databases and do something useful with it.
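As a rough picture of what a processing engine does with stored data, the plain-Python sketch below splits records into partitions, counts words in each partition independently, and then merges the partial results. This is not Spark code and uses no Spark API; the function names and sample records are made up, and the partitions here run one after another rather than in parallel across a cluster.

```python
from collections import Counter
from functools import reduce


def partition(records, num_partitions):
    """Deal records out into `num_partitions` roughly equal groups."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(lines):
    """The 'map' side: count words within a single partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def merge(left, right):
    """The 'reduce' side: combine two partial word counts."""
    return left + right


# Made-up sample records.
records = [
    "spark processes big data",
    "big data needs processing",
    "spark is an apache project",
]

partials = [count_words(part) for part in partition(records, 2)]
totals = reduce(merge, partials, Counter())
print(totals.most_common(3))
```

The point of the pattern is that each partition can be counted without knowing about the others, which is what lets a real engine hand the work to many machines at once.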

4.You Can Do SQL On Big Data

Lots and lots of people know how to build SQL databases and write SQL queries. That expertise doesn’t have to go to waste when the playing field moves to big data. Presto is an open source SQL query engine that allows data scientists to use SQL queries to interrogate databases that live in everything from Hive to proprietary commercial database management systems. It’s used by little companies like Facebook for interactive queries, and that phrase is key. Think of Presto as a tool for doing ad hoc, interactive queries on enormous data sets.
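To show the kind of ad hoc, interactive query this is about, here is a small Python sketch. It uses SQLite from the standard library purely as a stand-in so the example runs anywhere; it does not talk to Presto, and the table name, columns, and rows are invented. The idea is that the SQL itself is the familiar part that carries over.

```python
import sqlite3


def top_countries(conn):
    """Run an ad hoc aggregate query and return (country, total_views) rows."""
    query = """
        SELECT country, SUM(views) AS total_views
        FROM page_views
        GROUP BY country
        ORDER BY total_views DESC
    """
    return conn.execute(query).fetchall()


# In-memory database with made-up data; stands in for a much larger data set.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page_views (user_id INTEGER, country TEXT, views INTEGER)")
conn.executemany(
    "INSERT INTO page_views VALUES (?, ?, ?)",
    [(1, "US", 10), (2, "US", 5), (3, "DE", 7), (4, "FR", 2)],
)

rows = top_countries(conn)
for country, total in rows:
    print(country, total)
```

Someone who can write the `GROUP BY` above already has the core skill; what changes with a big data query engine is where the data lives and how much of it there is.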

5.Online Storage

There are some tasks within big data that involve rapidly changing data. Sometimes, this is data that is being added to on a regular basis, and sometimes it’s data that is changed through the analysis.

6.Cloud Storage

When the analysis is taking place on larger, aggregated databases for which you’re building big, batch-oriented routines, then the cloud can be perfect. Aggregate and transfer the data to the cloud, run the analysis, and then tear down the instance. It’s exactly the sort of elastic demand response the cloud does so well.
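The spin-up, analyze, tear-down cycle can be sketched in Python with a context manager, which guarantees the teardown step runs even if the analysis fails. Everything here is hypothetical: `temporary_cluster` and `run_batch` are made-up names, and the provisioning steps only append to a list where a real version would call a cloud provider's API.

```python
from contextlib import contextmanager

events = []  # records what happened, in order


@contextmanager
def temporary_cluster(name):
    """Hypothetical stand-in for provisioning and releasing cloud resources."""
    events.append(f"provision {name}")  # a real version would call the provider here
    try:
        yield name
    finally:
        # Runs whether the analysis succeeded or raised an exception.
        events.append(f"teardown {name}")


def run_batch(cluster, values):
    """Placeholder analysis: average a list of numbers 'on' the cluster."""
    events.append(f"analyze on {cluster}")
    return sum(values) / len(values)


with temporary_cluster("batch-job-1") as cluster:
    average = run_batch(cluster, [10, 20, 30])

print(average)
print(events)
```

Tying teardown to the end of the job, rather than to someone remembering to do it, is what keeps an elastic setup from quietly turning into a permanent bill.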

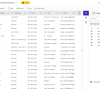

7.Visualization

It’s one thing to analyze big data. It’s quite another to present the analysis in a way that makes sense to most human beings. A picture can help quite a lot with the whole “making sense” thing, and so data visualization should be considered a critical part of your big data infrastructure.

Fortunately, there are a lot of ways to make great images happen, from JavaScript libraries, to commercial visualization packages, to online services. What’s the most important point? Pick a handful of these, try them, and let your users try them. You’ll find that solid visualization is the best way to make your big data analysis as valuable as possible.