The 3 most common ways data junkies are using Hadoop

Common patterns of Hadoop use

Hadoop was originally conceived to solve the problem of storing huge quantities of data at very low cost for companies like Yahoo, Google, Facebook and others. Now it is increasingly being introduced into enterprise environments to handle new classes of data. Machine-generated data, sensor data, social data, web logs and similar types are growing exponentially, and they are often (but not always) unstructured. It is this type of data that is turning the conversation from “data analytics” to “big data analytics,” because so much insight can be gleaned from it for business advantage.

Analytic applications come in all shapes and sizes and, most importantly, are oriented around addressing a particular vertical need. At first glance, they can seem to have little relation to each other across industries and verticals. But when observed at the infrastructure level, some very clear patterns emerge: most of these applications fit into one of the following three patterns.

Pattern 1: Data refinery

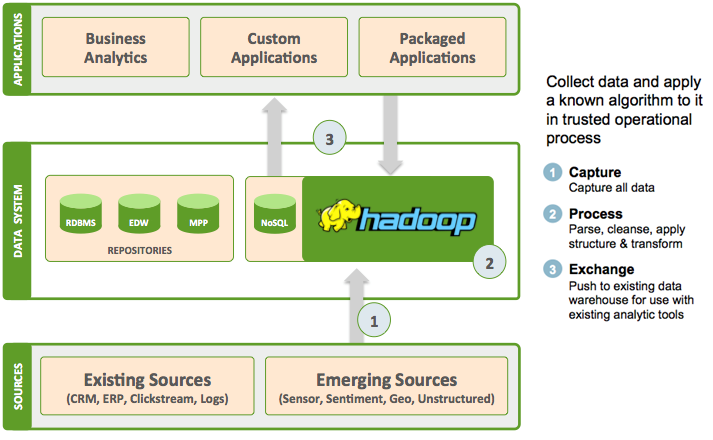

The “Data Refinery” pattern of Hadoop usage is about enabling organizations to incorporate these new data sources into their commonly used BI or analytic applications. For example, I might have an application that gives me a view of my customers based on all the data about them in my ERP and CRM systems, but how can I incorporate data from their web sessions on my website to see what they are interested in? This is the kind of problem customers typically turn to the “Data Refinery” pattern to solve.

The key concept here is that Hadoop is used to distill large quantities of data into something more manageable. The resulting data is then loaded into the existing data systems, where traditional tools can access it, but now with a much richer data set. In some respects, this is the simplest of all the use cases: it provides a clear path to value for Hadoop with very little disruption to the traditional approach.

No matter the vertical, the refinery concept applies. In financial services, we see organizations refine trade data to better understand markets or to analyze and value complex portfolios. Energy companies use big data to analyze consumption by geography to better predict production levels. Retail firms (and virtually any consumer-facing organization) often use the refinery to gain insight into online sentiment. Telecoms use the refinery to extract details from call detail records to optimize billing. Finally, in any vertical with expensive, mission-critical equipment, we often find Hadoop being used for predictive analytics and proactive failure identification. In communications, this may be a network of cell towers; a restaurant franchise may monitor refrigerator data.
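To make the “distill, then load” idea concrete, here is a minimal single-machine sketch of the refinery step written in the map/shuffle/reduce style that Hadoop MapReduce jobs follow. It is not Hadoop code and uses no Hadoop API. The tab-separated log format, the sample records and the function names (`map_phase`, `shuffle_and_reduce`, `refine`) are hypothetical, chosen only to mirror the web-session example above: many raw log lines go in, and a small table of per-customer page counts comes out, ready to be loaded into an existing warehouse.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical raw web-log format (an assumption): "customer_id<TAB>url<TAB>timestamp"
RAW_LOG = [
    "c1\t/products/tv\t2013-05-01T10:00:00",
    "c2\t/products/phone\t2013-05-01T10:01:00",
    "c1\t/products/tv\t2013-05-01T10:05:00",
    "c1\t/support\t2013-05-01T10:07:00",
    "malformed line",
]


def map_phase(lines: Iterable[str]) -> Iterator[tuple[tuple[str, str], int]]:
    """Emit ((customer_id, url), 1) for every well-formed log line."""
    for line in lines:
        fields = line.split("\t")
        if len(fields) != 3:
            continue  # drop records that cannot be parsed
        customer_id, url, _timestamp = fields
        yield (customer_id, url), 1


def shuffle_and_reduce(pairs: Iterable[tuple[tuple[str, str], int]]) -> dict[tuple[str, str], int]:
    """Group the pairs by key and sum their counts, as a reducer would."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for key, count in pairs:
        totals[key] += count
    return dict(totals)


def refine(lines: Iterable[str]) -> list[tuple[str, str, int]]:
    """Distill raw log lines into compact (customer, url, visits) rows for a warehouse load."""
    totals = shuffle_and_reduce(map_phase(lines))
    return sorted((customer, url, n) for (customer, url), n in totals.items())


if __name__ == "__main__":
    for row in refine(RAW_LOG):
        print(row)
```

On a real cluster the map and reduce functions would run in parallel across many nodes over files in HDFS; the point of the sketch is only the shape of the work, where a large, messy input shrinks to a small, structured result that traditional BI tools can already consume.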

Pattern 2: Data exploration with Apache Hadoop

The second most common use case is one we call “Data Exploration.” In this case, organizations capture and store a large quantity of this new data (sometimes referred to as a data lake) in Hadoop and then explore that data directly. So rather than using Hadoop as a staging area for processing and then moving the data into the enterprise data warehouse, as in the Refinery use case, the data is left in Hadoop and explored in place.
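By contrast with the refinery, exploration means asking ad hoc questions of the raw data where it sits, applying structure only at the moment of reading. The sketch below illustrates that idea on a single machine. It is not a Hadoop API. The JSON-lines event format, the sample cell-tower events and the `explore` function are hypothetical assumptions. In practice, queries like this would typically run over data in Hadoop through a tool such as Apache Hive rather than in plain Python.

```python
import json
from collections import Counter
from typing import Iterable

# Hypothetical raw events left as-is in the data lake, one JSON object per line (an assumption).
# Note that records need not share a fixed schema: the last one has no "region" field.
RAW_EVENTS = [
    '{"device": "tower-17", "type": "dropped_call", "region": "north"}',
    '{"device": "tower-03", "type": "handover", "region": "south"}',
    '{"device": "tower-17", "type": "dropped_call", "region": "north"}',
    '{"device": "tower-08", "type": "dropped_call"}',
]


def explore(lines: Iterable[str], event_type: str, group_field: str) -> Counter:
    """Count events of one type, grouped by any field, deciding the structure at read time."""
    counts: Counter = Counter()
    for line in lines:
        record = json.loads(line)
        if record.get("type") != event_type:
            continue
        # Missing fields are tolerated rather than rejected at load time.
        counts[record.get(group_field, "unknown")] += 1
    return counts


if __name__ == "__main__":
    print(explore(RAW_EVENTS, "dropped_call", "region"))
    print(explore(RAW_EVENTS, "dropped_call", "device"))
```

Because nothing was transformed up front, the analyst can switch from grouping by `region` to grouping by `device` without reloading anything. That flexibility is the appeal of leaving the data in Hadoop instead of first shaping it for a warehouse.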